Safety Net for Sandbox

Automatically moderate text, shapes, and attachments in your sandbox.

Safety Net, our AI-powered content moderation system, now watches over sandboxes. Like a helpful teaching assistant, it automatically reviews text, shapes, and attachments to keep your collaborative workspaces safe.

How Sandbox moderation works

When you enable auto-moderation, Safety Net checks everything that enters your sandbox. Each piece of text is reviewed when a user finishes typing, and attachments are checked upon upload. If something raises concerns, Safety Net blocks it and notifies you for final review.

If someone edits previously approved text, Safety Net automatically reviews it again. No sneaky edits can slip through, because every change gets a fresh look.

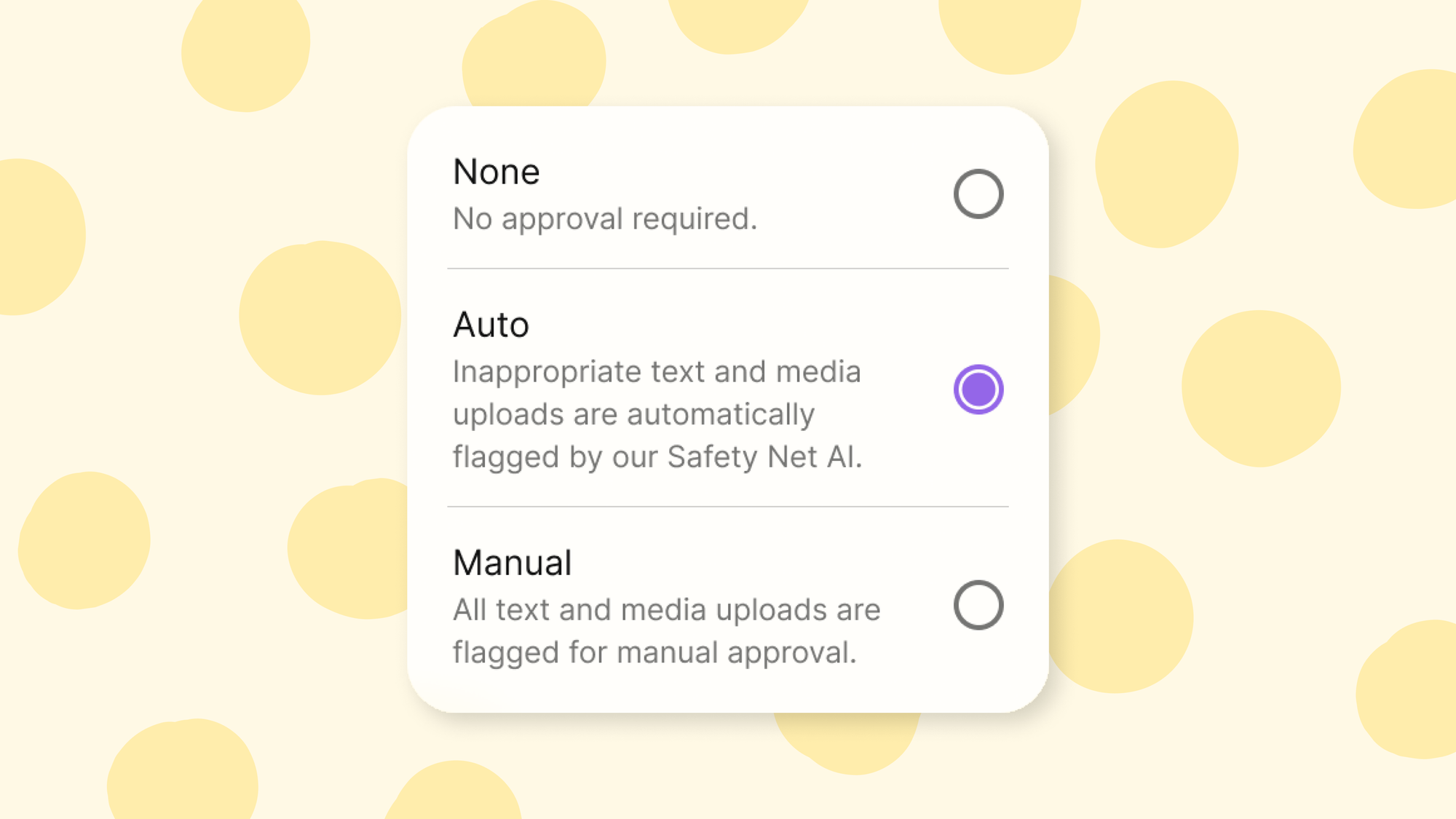

Setting up auto-moderation

- Create a sandbox

- Open the settings panel

- Under Content, go to Moderation

- Select Auto from the dropdown menu

That's it! Safety Net will now monitor your sandbox, letting you focus on collaboration instead of content monitoring.

Flexible control

Want more oversight? You can switch to manual moderation at any time. This lets you personally review every addition to your sandbox. Whether you choose automatic or manual, you remain in control of your collaborative space.

Why this matters

Teachers spend precious time monitoring content when they'd rather focus on teaching. Safety Net does the initial screening automatically, flagging potential issues while letting appropriate content through immediately. It's like having a trustworthy assistant watching your sandbox while you teach.

Already using Safety Net on your boards? The same trusted technology now protects your sandboxes, creating a consistent safety layer across all your Padlet spaces.

Start moderating

Safety Net for Sandbox is available now. Enable it on any sandbox where you want intelligent content monitoring. Let it handle moderation while you focus on creating and learning together.